In a controversial shift, Alphabet Inc., the parent company of Google, has revised its principles on the deployment of artificial intelligence (AI), signaling an openness to potential military applications. The technology giant has eliminated prior assurances that it would refrain from using AI for harmful purposes, including the development of weapons and surveillance systems.

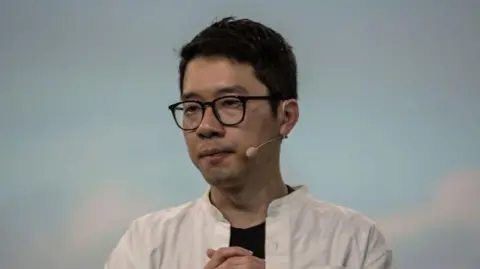

In a recent blog post, Google senior vice president James Manyika and Google DeepMind CEO Demis Hassabis argued that businesses and democratic governments must collaborate to create AI technologies that "support national security." This marks a significant departure from the company's previous stance, which emphasized its commitment to ethical AI use.

The change reflects ongoing debates among AI scholars and industry experts over how this rapidly advancing technology should be governed. Many are concerned about the use of AI on the battlefield and its potential to enhance surveillance capabilities. The blog post acknowledged that the company's original AI principles, established in 2018, needed updating in light of the technology's evolution. With billions of people now using AI in various applications, the post says, the technology has moved from a niche research area to a critical component of everyday life.

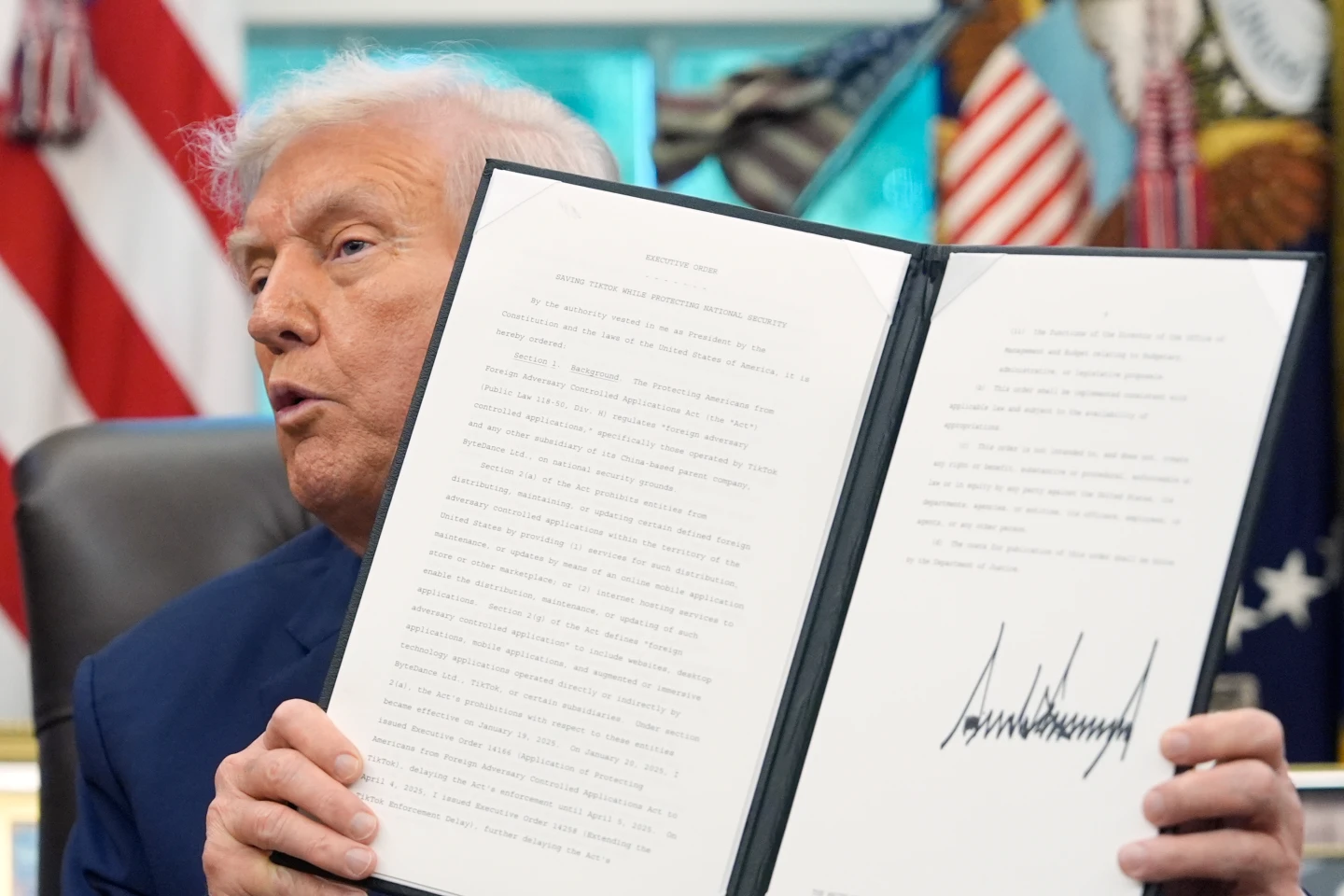

According to the blog post, the geopolitical landscape and the technology's growing prevalence make this update to the principles necessary. Manyika and Hassabis insisted that democracies must lead in AI development, anchored in foundational values such as freedom and respect for human rights. They also called for collaboration among governments and companies that share these values to foster an AI ecosystem that enhances security and promotes global growth.

The announcement coincided with Alphabet's recent financial results, which fell short of market expectations and weighed on its share price. Despite this, the company announced a $75 billion investment plan focused on AI development, 29% more than initial estimates, targeting advances in AI infrastructure and applications.

Google's AI platform, Gemini, is now prominently featured in search results and integrated into the company's Pixel smartphones. The shift toward military and defense work recalls earlier internal conflicts at the firm, notably its 2018 decision to end a contract with the Pentagon amid employee backlash over the ethical implications of "Project Maven."

As Google moves from its original motto of "don't be evil" to a more ambivalent "do the right thing," the environmental community and ethical AI advocates are watching closely, gauging the implications for society and for the ethical governance of emerging technologies.