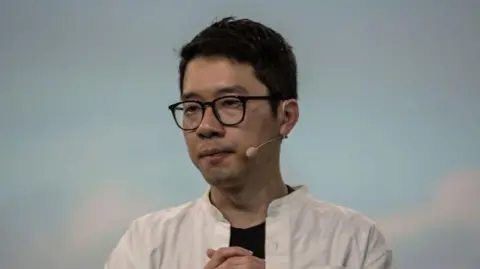

In a recent blog post, Google executives James Manyika and Demis Hassabis defend the company's new stance, arguing that businesses and democratic governments need to collaborate to ensure that AI supports national security objectives. They contend that because AI has become a critical part of everyday life for billions of people, the company's foundational principles must evolve in response to a rapidly changing world.

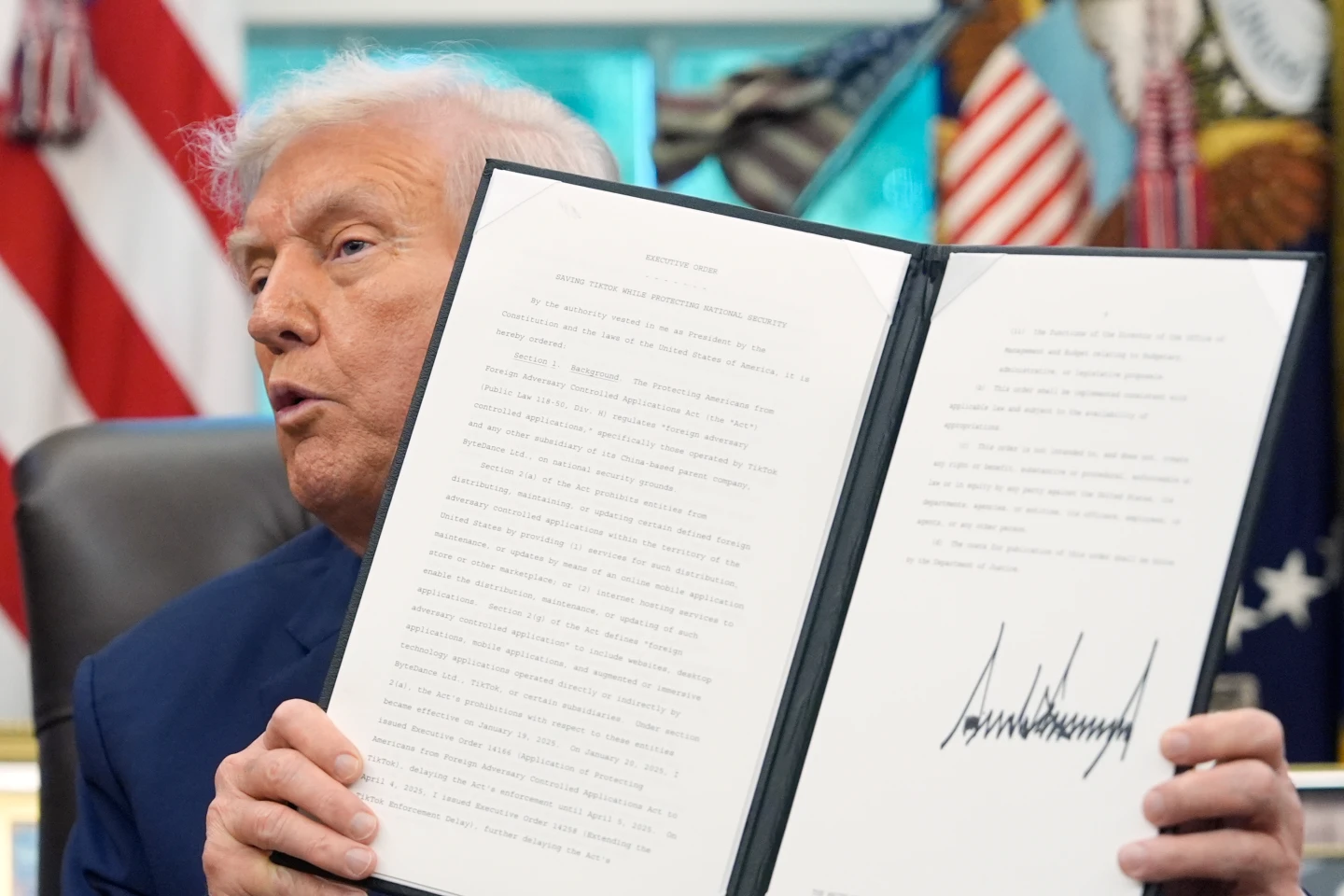

The blog post acknowledged the complexities of the current geopolitical climate and emphasized that democracies should lead AI development, guided by the values of freedom, equality, and respect for human rights. The revised principles come on the heels of Alphabet's underwhelming financial performance, despite its commitment to invest $75 billion in AI this year, a figure 29% higher than analysts had expected.

Historically, Google has faced backlash from employees regarding its military engagements, notably during the controversy over "Project Maven," which aimed to utilize AI in drone operations for the Pentagon. The decision to reset its AI principles reignites debates around the ethical implications of AI applications and reflects the ongoing tension between technological advancement and moral responsibility.

Google's AI platform Gemini, which is proving instrumental in a variety of applications, highlights the double-edged nature of AI's growing capabilities and prompts essential conversations about its future role across multiple domains, including war and peace.

Tech companies are investing ever more heavily to harness AI's potential, while society grapples with the consequences of its deployment in sensitive areas.
